In the past week, all the rage (actual hacker rage in some forums) in the already hot area of containers is about forking Docker code, and I have to say, “what the fork?”

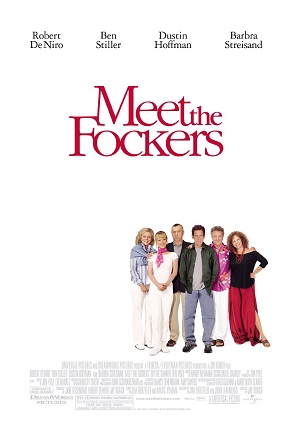

Really!? Maybe it is because I work in IT infrastructure, but I believe standards and consolidation are good and useful...especially “down in the weeds,” so that we can focus on the bigger picture of new tech problems and the apps that directly add value. This blog installment summarizes Docker’s problems of the past year or so, introduces you to the “Fockers” as I call them, and points out an obvious solution that, strangely, very few folks are mentioning.

This reminds me of the debate about overlay networking protocols a few years back – only then we didn’t blow up Twitter doing it. This is a bigger deal than overlay protocols, however, so my friends in networking may relate it to deciding upon the packet standard (a laugh about the joy of standards). We have IP today, but it wasn’t always so pervasive.

Docker is actually decently pervasive today as far as containers go, so if you’re new to this topic, you might wonder where the problem is. Quick recap… The first thing you should know is that Docker is not one thing, even though we techies talk about it colloquially as if it were. There’s Docker Inc., Docker’s open source GitHub code and community, and Docker products. Most notably, Docker Engine is usually just “Docker,” and even that is actually a CLI tool and a daemon (background process). The core tech that made Docker famous is a packaging, distribution, and execution wrapper over Linux containers that is very developer and ops friendly. It was genius, open source, and everyone fell in love. There were cheers of “lightning in a bottle,” and “VMs are the new legacy!” Even Microsoft has had a whale of a time joining the Docker ecosystem more and more.

Containers and the good tech around them have absolutely let tech move faster, transforming CI/CD, devops, cloud computing, and NFV. Well…it hasn’t quite hit NFV absolutely yet, but that’s a different blog.

Docker wasn’t perfect, but when they delivered v1.0 (summer 2014), it was very successful. But then something happened. Our darling started to make promises she didn’t keep and worse, she got fat. No, not literally, but Docker received a lot more VC funding, and grew both organically and through a few acquisitions. This product growth took them away from just running and wrapping containers and into orchestration, analytics, networking, etc. (Hmm. Reminds me of VMware actually. Was that successful for them? :/) Growing adjacent to or outside of core competencies is always difficult for businesses, let alone doing it under the pressure of having taken VC funding and being expected to multiply it. At the same time, as Docker started releasing more features more frequently, quality and performance suffered.

By and large I expect that most folks using Docker weren’t using it with support from Docker Inc. or anyone else. Nonetheless, with Docker dissatisfaction, boycotts and breakups starting to surface, in came some alternatives: fairly new entrants CoreOS and Rancher, with more support from traditional Linux vendors Red Hat (Project Atomic) and Canonical (LXD).

Because containers are fundamentally enabled by the Linux kernel, all Linux vendors have some stake in seeing that containers remain successful and proliferate. Enter OCP – wait, that name is taken – OCI, I mean OCI. OCI aims to standardize a container specification: what goes in the container “packet,” both as an on-disk and portable file format, and what it means to a runtime. If we can all agree on that, then we can innovate around it. There is also a reference implementation, runC, donated by Docker, and I believe that, as of v1.11, Docker Engine adheres to the specification. However, according to the OCI site, the spec is still in progress, which I interpret as a moving target.
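To make the “packet” idea a bit more concrete, here is a rough sketch of what a runtime bundle looks like on disk: a root filesystem directory plus a `config.json` that tells the runtime what to run and how to isolate it. The field names follow my reading of the OCI runtime spec, but the version string, the values, and the helper names (`minimal_oci_config`, `write_bundle`) are assumptions made up for illustration. Treat it as a pared-down sketch, not a complete or authoritative config.

```python
import json
import tempfile
from pathlib import Path


def minimal_oci_config(args, hostname="demo"):
    """Return a pared-down runtime config; real configs carry many more fields."""
    return {
        # Assumed version string; the spec was still in progress when this was written.
        "ociVersion": "1.0.0",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),  # the command the runtime should start
            "env": ["PATH=/usr/bin:/bin"],
            "cwd": "/",
        },
        # Path to the container's root filesystem, relative to the bundle directory.
        "root": {"path": "rootfs", "readonly": True},
        "hostname": hostname,
        "linux": {
            # Kernel namespaces that isolate the process from the host.
            "namespaces": [
                {"type": t} for t in ("pid", "mount", "ipc", "uts", "network")
            ],
        },
    }


def write_bundle(bundle_dir, args):
    """Create <bundle_dir>/rootfs (left empty here) and <bundle_dir>/config.json."""
    bundle = Path(bundle_dir)
    (bundle / "rootfs").mkdir(parents=True, exist_ok=True)
    config_path = bundle / "config.json"
    config_path.write_text(json.dumps(minimal_oci_config(args), indent=2))
    return config_path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = write_bundle(tmp, ["/bin/sh", "-c", "echo hello"])
        print(path.read_text())
```

The point is that the bundle is just files. Any compliant runtime should be able to pick up the same directory, which is what lets the ecosystem innovate around the format instead of around one vendor’s daemon.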

You can imagine the differing opinions at the OCI table (and thrown across blogs and Twitter – more below if you’re in the mood for some tech drama). Those disagreements, and otherwise just the slow progress, have some folks wanting to fork Docker so we can quickly get to a standard.

But wait, there’s more! In Docker v1.12, nearly released and announced at DockerCon in June 2016, Docker Inc. refactored their largest tool, called Swarm, into the Docker Engine core product. When Docker made acquisitions like SocketPlane that competed with the container ecosystem, it stepped on the toes of other vendors, but they have a marketing mantra to prove they’re not at all malevolent: “Batteries included, but replaceable.” OK, fine, we’ll overlook some indiscretions. But what about bundling the Swarm orchestration tools with Docker Engine? Those aren’t batteries. That’s bundling an airline with the sale of the airplanes.

Swarm is competing with Kubernetes, Mesosphere and Nomad for container orchestration (BTW, Kubernetes and Kubernetes-based PaaSs are the most popular currently), and this Docker move appears to be a ploy to force-feed Swarm to Docker users whether they want it or not. Of course they don’t have to use it, but it is grossly excessive to have around if not used, not to mention detrimental to quality, time-to-deploy, and security. For many techies, aside from being too smart to have the Swarm pulled over their eyes, this was the last straw for another technical reason: the philosophy that decomposition into simple building blocks is good, and solutions should be loosely coupled integrations of these building blocks with clean, ideally standardized, interfaces.
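For the non-architects, here is a toy sketch of what “loosely coupled with clean interfaces” means in practice. Every name in it (`ContainerRuntime`, `DockerLikeRuntime`, `RktLikeRuntime`, `Scheduler`) is hypothetical and invented for illustration; none of this is a real Docker, rkt, or Kubernetes API. The scheduler only knows the small interface, so the runtime underneath can be swapped without touching it.

```python
from typing import List, Protocol


class ContainerRuntime(Protocol):
    """The one small contract an orchestrator needs from a runtime (hypothetical)."""

    def run(self, image: str, args: List[str]) -> str:
        ...


class DockerLikeRuntime:
    """Stand-in for one runtime; it only returns a description of what it would do."""

    def run(self, image: str, args: List[str]) -> str:
        return f"docker-like: {image} {' '.join(args)}"


class RktLikeRuntime:
    """Stand-in for a second runtime that honors the same contract."""

    def run(self, image: str, args: List[str]) -> str:
        return f"rkt-like: {image} {' '.join(args)}"


class Scheduler:
    """Toy orchestrator that depends on the interface, never on a concrete runtime."""

    def __init__(self, runtime: ContainerRuntime) -> None:
        self.runtime = runtime

    def schedule(self, image: str, args: List[str]) -> str:
        return self.runtime.run(image, args)


if __name__ == "__main__":
    # Swapping the building block is a one-line change for the caller.
    for runtime in (DockerLikeRuntime(), RktLikeRuntime()):
        print(Scheduler(runtime).schedule("busybox", ["echo", "hi"]))
```

Bundling the orchestrator into the engine is the opposite design: the two pieces ship, version, and break together.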

Meet the Fockers! So, what do you do when you are using Docker, but you’re increasingly at odds with the way that Docker Inc. uses its open source project as a development ground for a monolithic product, a product you mostly don’t want? Fork them!

Especially among hard-core open source infrastructure users, people have a distaste for proprietary appliances, and Docker Engine is starting to look like one. All-in-ones are usually not so all-in-wonderful. That’s because functions built as decomposed units can proceed on their own innovation paths with more focus and thus, more often than not, beat the same function in an all-in-one. Of course, I’m not saying all-in-ones aren’t right for some, but they’re not for those that demand the best, nor for those that want choice to change components instead of getting locked into a monolith.

Taking all this into account, all the talk of forking Docker seems bothersome to me for a few reasons.

First of all, there are already over 10,000 forks of Docker. Hello! Forking on GitHub is click-button easy, and many have done it. As many have said, forking = fragmentation, and it makes life harder for those who need to use and support multiple forks or test integrations with them, a.k.a. the container ecosystem of users and vendors.

Second, someone creating a fork presumably wants to change it (BTW non-techies, a fork is the new copy that you now own – thanks for reading). I haven’t seen anybody actually comment on what they would change if they forked the Docker code. All this discussion and no prescription :( Presumably you would fix bugs that affect you or try to migrate fixes that others have developed, including Docker Inc., which will obviously continue to develop them. What else would you do? Probably scrap Swarm because most of you (in discussions) seem to be Kubernetes fans (me too). Still, in truth you have to remove and split out a lot more if you really want to decompose this into its basic functions. That’s not trivial.

Third, let’s say there is “the” fork. Not my fork or yours, but the royal fork. Who starts this? Who do you think should own it? Maybe it would be best for Docker if they just did this! They could re-split out Swarm and other tools too.

Finally, my preferred solution: don’t fork, make love. In seriousness, what I mean is two things:

1. There is a standards body, the OCI. Make sure Docker has a seat at the table, and get on with it! If they’re not willing to play ball, then let it be widely known, and move OCI forward without them. I would think they have the most to lose here, so they would cooperate. The backlash if they didn’t may be unforgiving.

2. There is already a great compliant alternative to Docker today that few are talking about: rkt (pronounced Rocket). It was pioneered by CoreOS, and is now supported on many Linux operating systems. rkt is very lightweight. It has no accompanying daemon. It is a single, small, simple function that helps start containers. Therefore, it passes the test of a small decomposed function. Even better, the most likely Fockers are probably not Swarm users, and all other orchestration systems support rkt: Kubernetes’s rktnetes, Mesos’s unified containerizer, and Nomad’s rkt driver. We all have a vote, with our wallet and free choices, so maybe it is time to rock it on over to rkt.

In closing, I offer some more ecosystem observations:

In spite of this criticism (much of it is not mine in fact, but that of others to which I’m witness), I’m a big fan of Docker and its people. I’m not a fan of the recent strategy of including Swarm in core, however. I believe it goes against the generally accepted principles of computer science and Unix. IMO Docker Engine was already too big pre-v1.12. Take it out, compete on the battleground of orchestration on your own merits, and find new business models (like Docker Cloud).

I feel like the orchestration piece is important and the momentum is with Kubernetes. I see the orchestration race as a little like OpenStack vs. CloudStack vs. Eucalyptus 5 years ago, when the momentum was with OpenStack. Today it’s with Kubernetes, but for different reasons. Kubernetes has well-designed and cleanly defined interfaces and abstractions. It is very easy to try and learn. To those who say it is hard to learn, set up and use: what was your experience with OpenStack? Moreover, there are tons of vanilla Kubernetes users; can’t say that about OpenStack. In the OpenStack space, vendors add value with support and getting to day-1, but with Kubernetes the vendor space is adding features on top of Kubernetes, moving toward PaaS. That’s a good sign that the Kubernetes scope is about right.

I’ve seen 99% Docker love and 1% anti-Docker sentiment in my own experience. My employer, Juniper, is a Docker partner and uses Docker Engine. We have SDN and switching integrations for Docker Engine, and so personally, my hope is that Docker finds a way through this difficulty by re-evaluating how it is using open source and business together. Open source is not a vehicle to drive business or shareholder value, which is inherently competitive. Open source is a way to drive value to the IT community at large in a way that is intentionally cooperative.

Related:

http://thenewstack.io/docker-fork-talk-split-now-table/

http://thenewstack.io/container-format-dispute-twitter-shows-disparities-docker-community/